The following lines are the result of collaborative work, under the leadership of Justin Seitz. There are many of us working together, including Heartbroken and Nanardon.

OSINT is an acronym for Open Source Intelligence. It’s a set of investigative techniques, allowing information to be retrieved from so-called open sources. Used by journalists, by police or in cybersecurity, OSINT can help to find information but it can also be used to protect yourself from malicious people.

Violence against people, especially against women, has increased and diversified: harassment, raids, doxxing, revenge porn in videos or pictures, identity theft, school bullying, etc.

How should you react? How can you prevent these attacks? Our goal is to give you simple resources that require no special knowledge.

These resources are not a substitute for support groups, law enforcement, health professionals or lawyers.

We trust you.

You are not responsible.

The acts and situations we use to illustrate our kits are punishable under both criminal and civil law.

You are not alone.

The information provided in this article does not, and is not intended to, constitute legal advice; instead, all information, content, and materials available in this article are for general informational purposes only. Furthermore, this article was written mainly with regard to French and European law. Readers should consult their local laws and contact an attorney to obtain advice with respect to any particular legal matter.

Making insulting or degrading photomontages and spreading them on the Web is a specialty of stalkers. We can distinguish several cases:

- Very crude photomontages, which are not intended to be credible;

- Filthy photomontages, in particular of a pornographic nature;

- Video montages and more particularly deepfakes.

Rough photomontages

In this case, a photo of the victim is used in an obscene montage. There is no doubt about the authenticity of the pictures: everyone knows they are fake. We find, for example, collages of memes such as Pepe The Frog combined with photos of the victims. Stalkers use images that they have found on the Web: social networks, press clippings, media appearances, etc. We also note that the same photomontages often circulate again and again.

If the montages circulate on social networks, harassment can be invoked: insofar as these photomontages are used for malicious purposes, they fall within that legal framework.

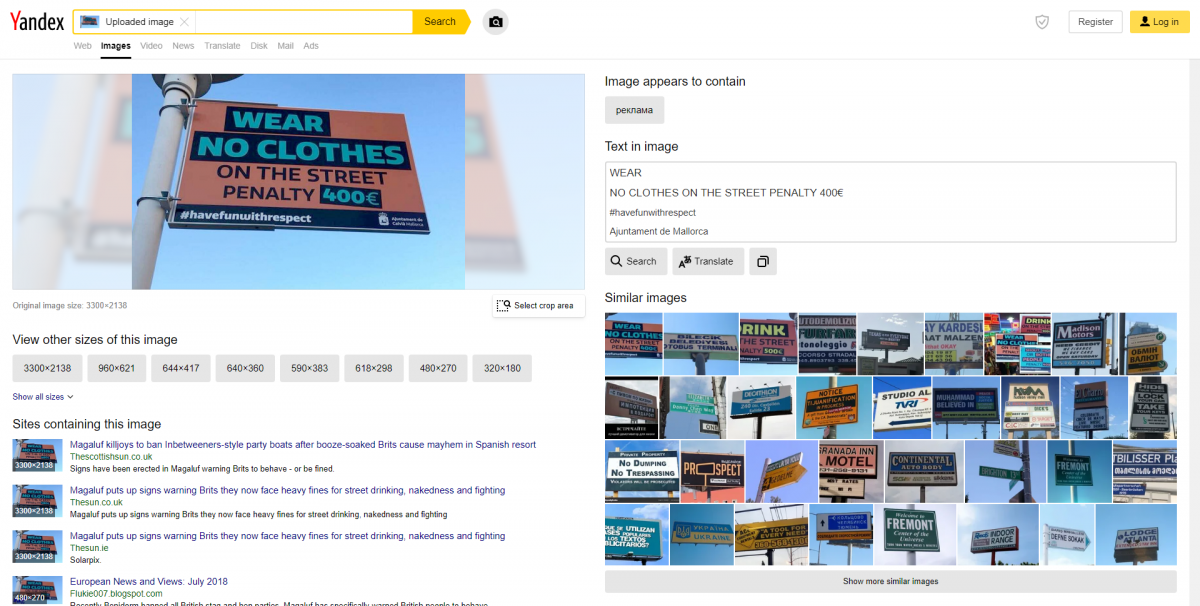

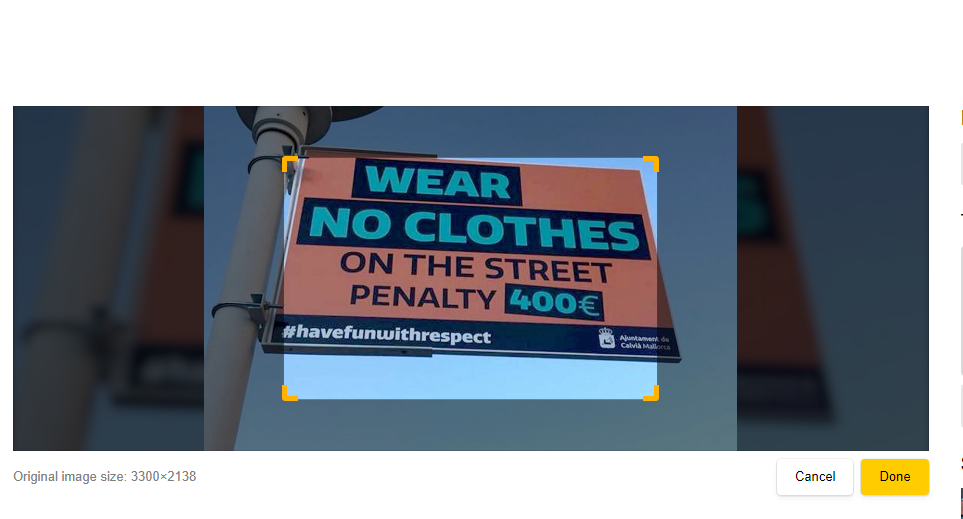

To identify photomontages, we can use what is called a reverse image search. There are several browser extensions and online tools, but our preference goes to Yandex.

In our experience, Yandex's reverse image tool is the most efficient for image analysis, and it also lets you isolate parts of the image.

So, if your face is used in a photomontage, you can isolate it with the selection tool and ask Yandex to bring up all the pages where it appears.

The only limit of this tool, as with all the others, is that it cannot go beyond what is indexed. If an insulting photomontage is exchanged via private messages, which are not indexed by search engines, Yandex will not be able to find it. The problem is the same for Discord, because it does not allow its content to be indexed by search engines.

If you prefer to use a browser extension, we recommend Search By Image. Install it and, when you see an image that concerns you, right-click it, then choose "Search by image" and "All search engine".

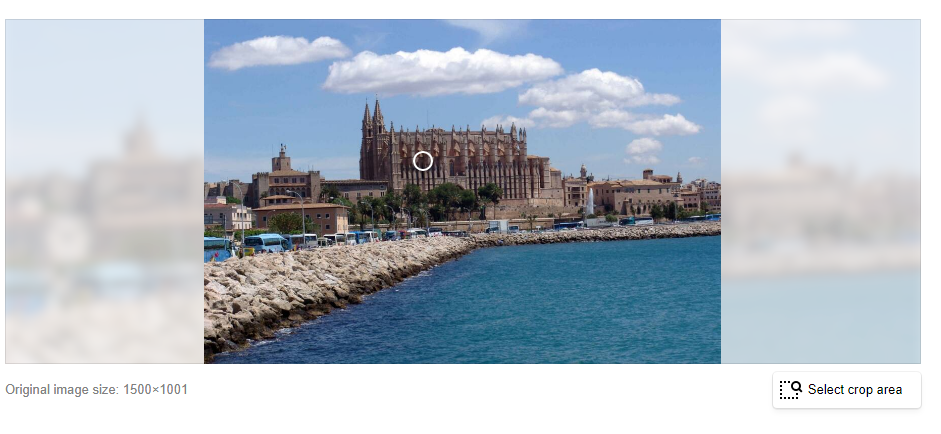

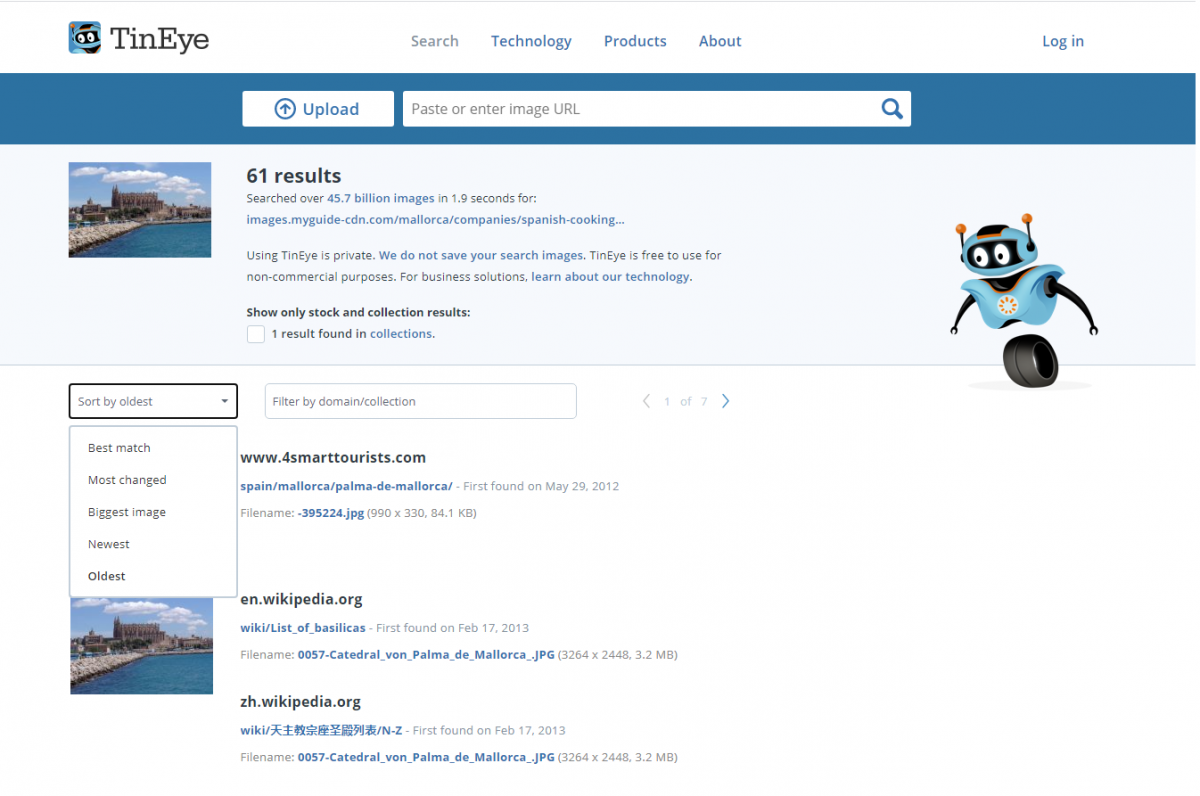

Finally, if you want to know when an image has been indexed on the Web, you can use TinEye. You can upload an image or give it a URL and then review the results.
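When the image is already online, the same searches can be prepared from its address. The sketch below builds search links for Yandex and TinEye from an image URL. The URL patterns are assumptions based on how these sites currently behave; they are not documented in this article and may change without notice. The function name `reverse_search_links` is ours.

```python
from urllib.parse import urlencode

# Assumed URL patterns: not an official API, they may change at any time.
SEARCH_ENDPOINTS = {
    "yandex": ("https://yandex.com/images/search", {"rpt": "imageview"}),
    "tineye": ("https://tineye.com/search", {}),
}


def reverse_search_links(image_url):
    """Return one reverse image search link per engine for a public image URL."""
    links = {}
    for name, (base, fixed_params) in SEARCH_ENDPOINTS.items():
        # The image address is passed in the "url" query parameter.
        params = dict(fixed_params, url=image_url)
        links[name] = base + "?" + urlencode(params)
    return links
```

Opening each link in a browser gives the same result page as pasting the image address into the site by hand. This only works for images that are publicly reachable; a file on your computer still has to be uploaded manually.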

Filthy photomontages, especially of a pornographic nature

In this case, we are talking about pictures whose authenticity is in doubt: is this picture real? Moderation of pornographic content varies from platform to platform. On Facebook, moderation is very strict, to the point that photos of works of art have been removed without warning. On Twitter, there is no moderation on this subject. According to its terms and conditions, Instagram does not accept pornographic content.

For these platforms, it is enough to report the images to the moderators for them to disappear. Nevertheless, make sure to list links, times and profiles, and take screenshots with either Fireshot or Single File. Refer to the article on digital raids.
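For readers who are comfortable with a little scripting, the list of links, times and profiles can be kept in a consistent form. The following is a minimal sketch, not a legal standard: the field names and the function `evidence_record` are our own choices. It stores the link, the profile, the time in UTC and a SHA-256 fingerprint of the screenshot, which helps show later that the file has not been altered.

```python
import hashlib
from datetime import datetime, timezone


def evidence_record(url, profile, screenshot_bytes, seen_at=None):
    """Build one log entry for a piece of content before reporting it.

    seen_at should be a timezone-aware datetime; it defaults to now.
    A datetime without timezone is interpreted as local time.
    """
    seen_at = seen_at or datetime.now(timezone.utc)
    return {
        "url": url,
        "profile": profile,
        # Always store the time in UTC so entries can be compared.
        "seen_at_utc": seen_at.astimezone(timezone.utc).isoformat(timespec="seconds"),
        # Fingerprint of the screenshot file contents.
        "screenshot_sha256": hashlib.sha256(screenshot_bytes).hexdigest(),
    }
```

Each record can then be copied into a spreadsheet or saved alongside the screenshots. Whether such a log is accepted as evidence depends on the jurisdiction, so ask a lawyer about the expected format.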

For moderation, we refer the reader to the previous article on doxxing.

To spot the photo edits circulating on forums, you can again use reverse image search. If you want to prove that the pictures in circulation are not authentic, you can use online tools to show that they are montages.

Unfortunately, there is no magic tool that is 100% reliable on this point. Nevertheless, one tool can help: Foto Forensics. Purists will tell you that this goes beyond the strict framework of OSINT. Our answer is that IT is by nature a cross-disciplinary subject.

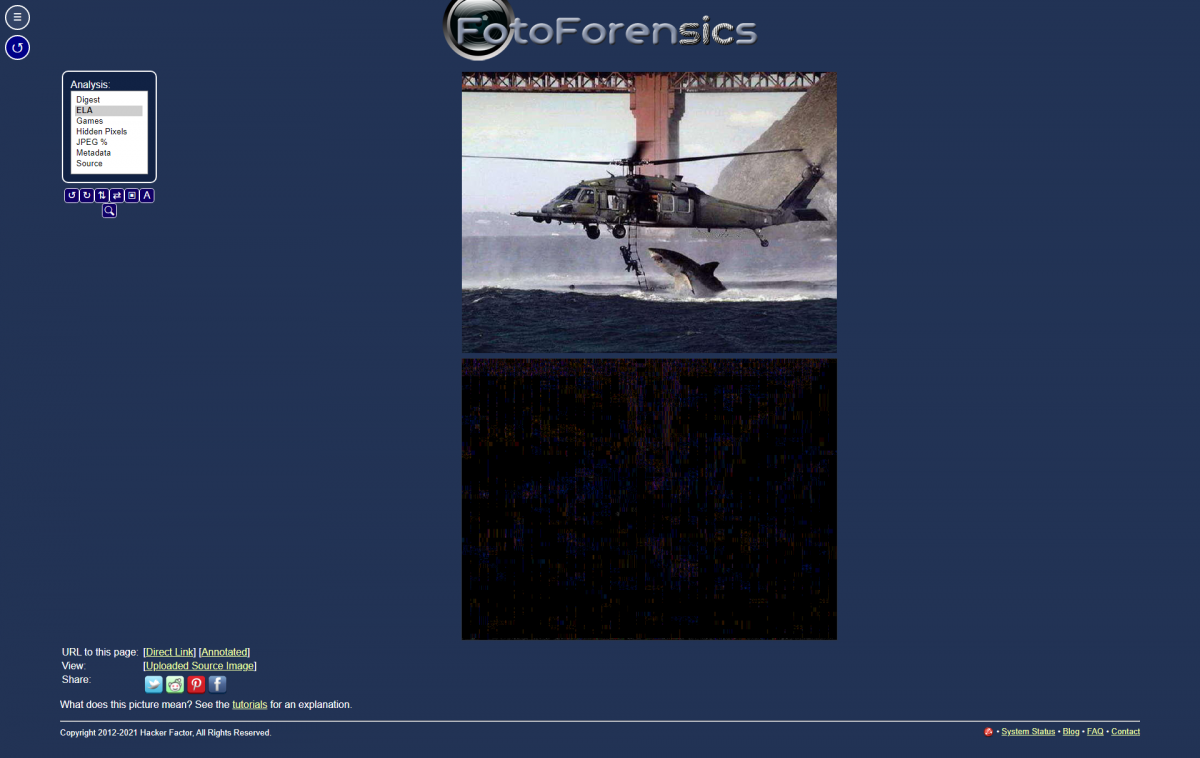

In Foto Forensics, you either give the link of the image you want to analyze or upload a photo. To help you understand how it works, here is the result for an image that has not been retouched or even cropped at all.

Here is the result of an image that has been retouched.

On the first photo, all the elements appear clearly in the analysis, including the text of the panel. On the second image, the bridge and the slope of the mountain appear almost uniform. In the edited photo, the difference in compression level shows up quite clearly.
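The idea behind this kind of analysis, usually called error level analysis, is to re-save the image as a JPEG and look at how much each area changes: areas with a different compression history change by a different amount. The sketch below illustrates only the comparison step, under strong simplifications. It assumes you already have two grayscale pixel grids of the same size (the original and a re-saved copy) as lists of lists; producing the re-saved copy requires an imaging library and is left out. The function names, the block size and the threshold factor are our own assumptions, and this is not how Foto Forensics itself is implemented.

```python
import statistics


def block_error_levels(original, resaved, block=8):
    """Mean absolute pixel difference for each block x block tile."""
    height, width = len(original), len(original[0])
    levels = []
    for top in range(0, height, block):
        row = []
        for left in range(0, width, block):
            diffs = [
                abs(original[y][x] - resaved[y][x])
                for y in range(top, min(top + block, height))
                for x in range(left, min(left + block, width))
            ]
            row.append(sum(diffs) / len(diffs))
        levels.append(row)
    return levels


def outlier_blocks(levels, factor=3.0):
    """Return (row, column) of tiles whose error level is far above the median."""
    flat = [value for row in levels for value in row]
    # Floor the median at 1.0 so that tiny differences are not flagged.
    threshold = factor * max(statistics.median(flat), 1.0)
    return [
        (r, c)
        for r, row in enumerate(levels)
        for c, value in enumerate(row)
        if value > threshold
    ]
```

A flagged tile is only a hint that an area deserves a closer look. Sharp edges, text and strong contrast also produce high error levels in untouched photos, which is one reason why no tool is 100% reliable.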

It may be useful to prove that a photo has been edited, especially if the victim is shown making obscene gestures or placed in filthy content.

Video editing and deepfakes

A deepfake is a very sophisticated video forgery. The goal is to mislead people into believing that a given individual has made derogatory comments. At the moment, few people have the technical ability to make credible deepfakes. Unfortunately, the targets have not changed: politicians, celebrities, and women in general.

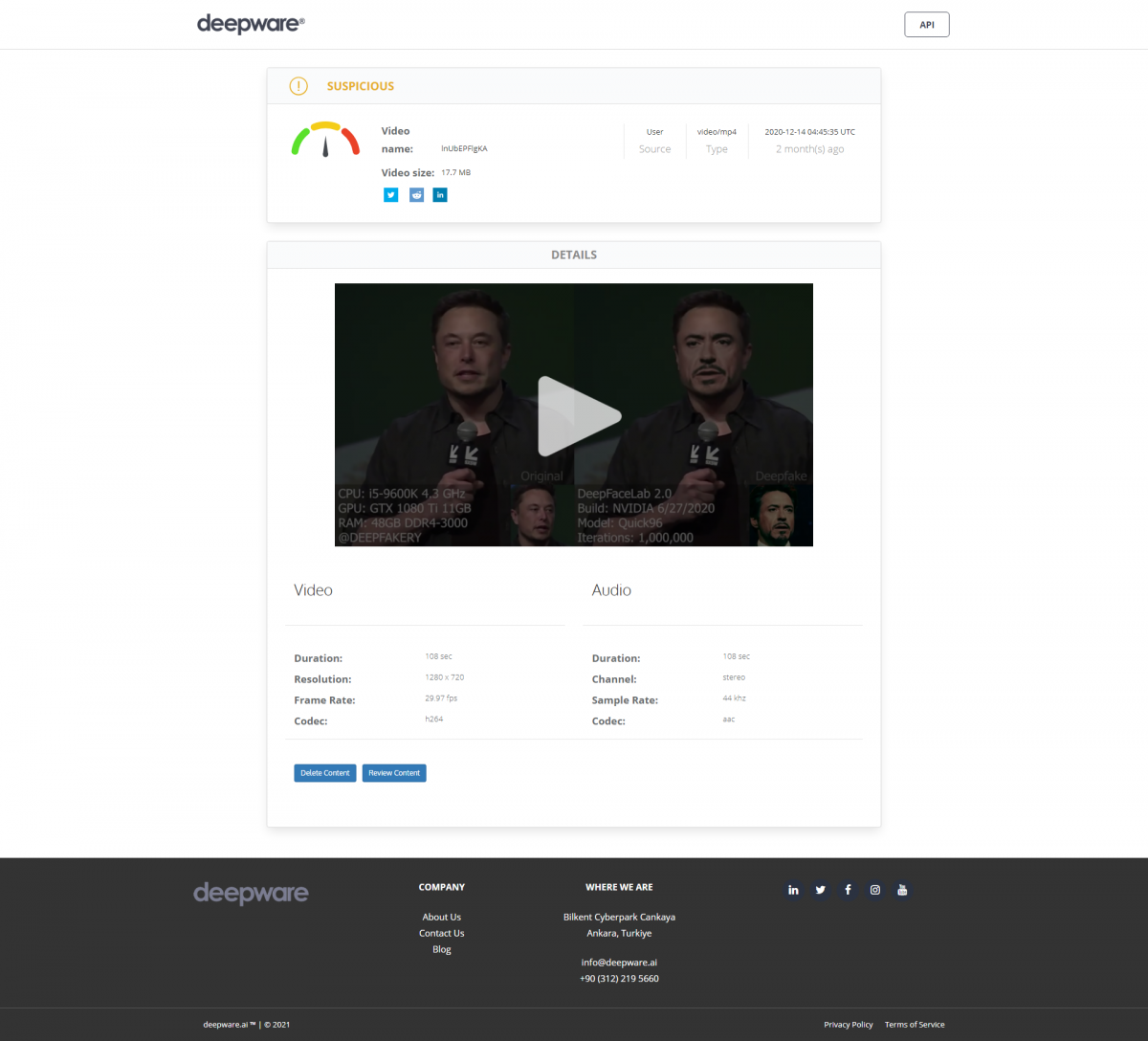

Deepware is a tool for detecting deepfakes. It is very easy to use: upload the video or provide a link. After analysis, a gauge indicates how reliable the video is. For the moment, it is the most accessible tool of its kind.

For the victims, the difficulty will be in the detection of pornographic deepfakes. What can be done if a person has made a pornographic video montage and shared it on adult sites such as YouPorn or PornHub? The reverse image technique works partially. For the purposes of this experiment, we went to an adult site. This site has no special protection and is free to access. We launched a random video and took a screenshot of an actress. When we uploaded the image to Yandex, 6 results came up. These are the sites where this video was uploaded. But the original site was not detected by Yandex.

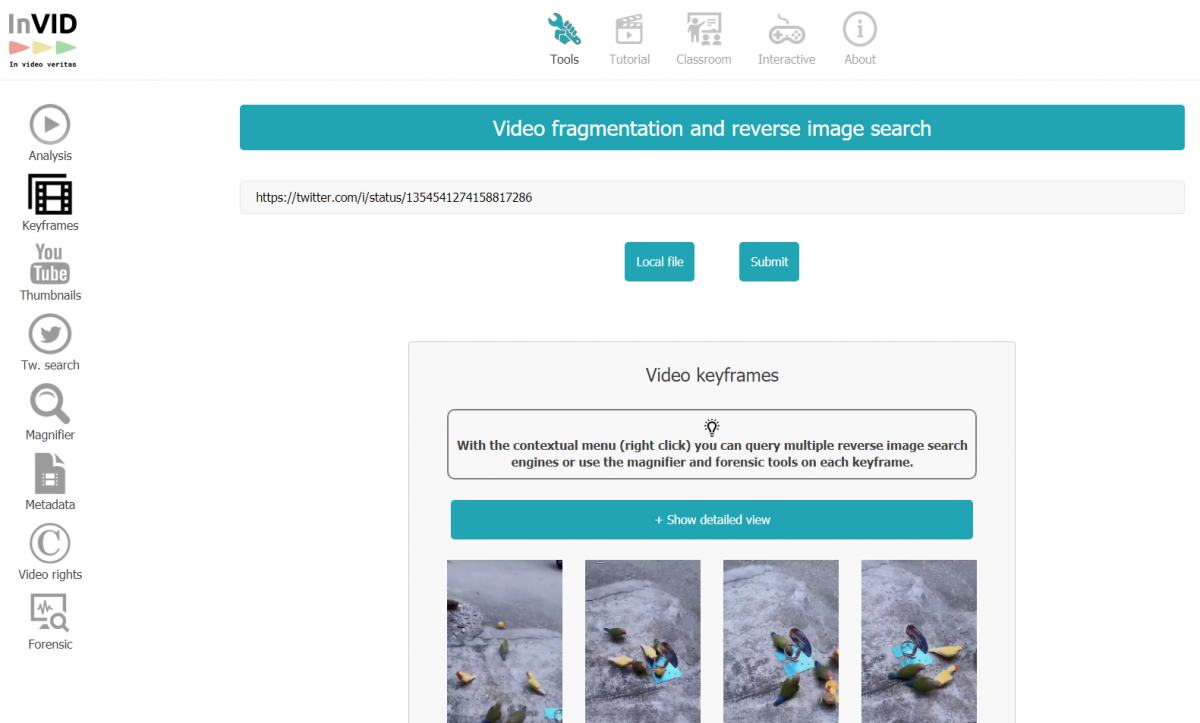

We can also try the Fake News debunker extension, which analyzes videos. Initially designed for journalism, it can be useful for other types of video because it analyzes them frame by frame.

In our example, we used a video contained in a tweet.

Open the extension, choose "Open InVid", then select "Keyframes". By analyzing the video frame by frame, the tool can recover some information. This may seem rudimentary and tedious, but there is no shortage of innovations in this field.
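If you have a copy of the video file, a similar set of still images can be produced locally and then fed to a reverse image search. The sketch below is a stand-in, not what the extension does internally: it asks the `ffmpeg` command-line tool, which must be installed separately, to save only the I-frames (the fully encoded frames of the video). The function names and the output file pattern are our own; recent ffmpeg releases prefer `-fps_mode vfr` over `-vsync vfr`.

```python
import subprocess
from pathlib import Path


def keyframe_command(video_path, output_dir):
    """Build the ffmpeg command that writes each I-frame as a PNG file."""
    pattern = str(Path(output_dir) / "keyframe_%04d.png")
    return [
        "ffmpeg",
        "-i", str(video_path),
        # Keep only intra-coded frames (I-frames).
        "-vf", "select='eq(pict_type,I)'",
        # Do not duplicate frames to fill the gaps between kept frames.
        "-vsync", "vfr",
        pattern,
    ]


def extract_keyframes(video_path, output_dir):
    """Create the output folder and run ffmpeg; raises if ffmpeg fails."""
    Path(output_dir).mkdir(parents=True, exist_ok=True)
    subprocess.run(keyframe_command(video_path, output_dir), check=True)
```

Calling `extract_keyframes("clip.mp4", "frames")` would fill the `frames` folder with numbered images. I-frames are chosen by the video encoder, so they do not necessarily match the keyframes that the extension selects.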

Does this mean that nothing can be done about it? Not exactly.

The legal bazooka

Concerning vulgar or filthy photomontages, it is up to you to decide what you want to do. We simply recommend that you archive everything you can. Do not hesitate to consult a lawyer. If you wish to sue or file a complaint against the authors, the lawyer will explain the procedure.

For pornographic photomontages, here again, it is up to you to decide what action to take against platforms and sites that are not reactive enough.

What can be done legally against video edits? This will be covered in another post, but victims have a major asset: the DMCA notice. It is the absolute gold standard in terms of content removal from the Web. With the exception of sites on the Darknet/Darkweb, all online services are inundated with DMCA notices, to the point that they very quickly remove anything that could be considered a copyright infringement. Finally, you should be aware that many platforms bury their heads in the sand when dealing with individuals, but suddenly become more cooperative when faced with requests from lawyers.
